What is immersive audio?

Immersive audio uses a range of channel-based, object-based, and scene-based methods to provide an exceptional listening experience. Immersive (or spatial) audio therefore generally falls into three categories: channel-based audio (CBA), object-based audio (OBA), and scene-based audio (SBA), and most systems use a mixture of them. Today, immersive audio typically combines CBA and OBA; in the future, it is expected to combine CBA, OBA, and SBA.

Channel-Based Audio

CBA is the most straightforward approach to spatial audio, though on its own it offers limited immersion. At its most basic, CBA is a two-speaker stereo setup. Adding a third speaker in the center acts as an anchor for the stereo image, improving the experience for listeners who are not sitting in the ideal listening position (the “sweet spot”). The most prevalent CBA technology today is surround sound, which adds speakers arranged in a two-dimensional (2D) horizontal plane around the listener so that sound can come from the front, sides, and rear. A widely recognized surround format is 5.1: five full-range speakers carry the left, right, and center front channels and the left and right rear (surround) channels, while a single subwoofer, typically placed at the front, carries the low-frequency effects (LFE) channel, the “.1”. Surround sound can also be “virtualized” by a sound bar placed in front of the listener.
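Because every CBA channel is tied to a specific speaker position, playing 5.1 content on a two-speaker system requires a fixed downmix. The sketch below assumes the commonly used -3 dB (about 0.707) coefficients for the center and surround channels, as in the ITU-R BS.775 stereo downmix, and simply drops the LFE channel; the function names are hypothetical.

```python
import math

# About -3 dB (1/sqrt(2)): a commonly used downmix coefficient for the
# center and surround channels when folding 5.1 into stereo.
K = 1 / math.sqrt(2)

def downmix_51_to_stereo(L, R, C, LFE, Ls, Rs):
    """Fold one frame of 5.1 samples into a (left, right) pair.

    The LFE channel is dropped here, which is a common but not universal
    choice. The sum can exceed full scale, so real downmixers usually
    attenuate or limit the result.
    """
    left = L + K * C + K * Ls
    right = R + K * C + K * Rs
    return left, right

def downmix_block(frames):
    """Apply the downmix to a list of (L, R, C, LFE, Ls, Rs) tuples."""
    return [downmix_51_to_stereo(*frame) for frame in frames]
```

A center-only signal lands equally in both outputs at about 0.707 of its level, which keeps dialog centered between the two speakers.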

Object-Based Audio

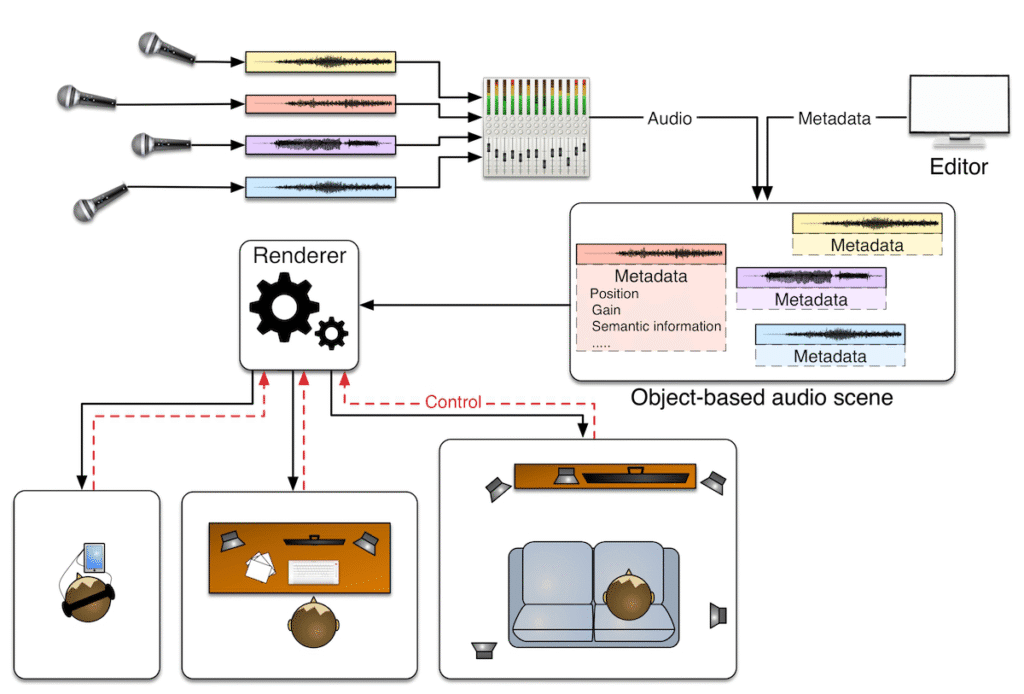

OBA is a system in which audio ‘objects’, such as voices, individual instruments, and sound effects, are stored as separate audio files, usually with associated metadata that defines their levels, positions, panning, and other characteristics. OBA also uses the concept of a scene to hold the objects’ metadata; the scene is neutral with respect to the output audio format and playback system. The renderer puts everything together: it uses the metadata to mix the audio objects for the playback device (speakers or headphones) and the speaker layout (stereo, 5.1, 7.1, etc.).
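The renderer's job can be sketched for a single target layout. The example below is a minimal, hypothetical stereo renderer: each object carries its own samples, a linear gain, and a pan position (a simplified stand-in for real position metadata), and the renderer applies a constant-power sine/cosine pan law. A real renderer would provide one such stage per supported layout and use richer metadata; the `AudioObject` class and function names here are assumptions, not any product's API.

```python
import math
from dataclasses import dataclass

@dataclass
class AudioObject:
    """Hypothetical audio object: mono samples plus minimal metadata."""
    name: str
    samples: list[float]
    gain: float = 1.0   # linear level taken from the metadata
    pan: float = 0.0    # -1.0 = full left, 0.0 = center, +1.0 = full right

def stereo_pan_gains(pan):
    """Constant-power (sine/cosine) pan law for one left/right speaker pair."""
    p = max(-1.0, min(1.0, pan))          # clamp to the valid range
    theta = (p + 1.0) * math.pi / 4       # map -1..1 onto 0..pi/2
    return math.cos(theta), math.sin(theta)

def render_stereo(objects):
    """Mix every object into a stereo bus using that object's own metadata."""
    n = max((len(o.samples) for o in objects), default=0)
    left, right = [0.0] * n, [0.0] * n
    for obj in objects:
        gl, gr = stereo_pan_gains(obj.pan)
        for i, s in enumerate(obj.samples):
            left[i] += obj.gain * gl * s
            right[i] += obj.gain * gr * s
    return left, right
```

Because the objects themselves never change, the same list could be handed to a different renderer (for example, a 5.1 one) without remixing the content, which is what the format-neutral metadata makes possible.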

Because the audio objects and their metadata are passed individually to the renderer, OBA gives listeners more control over the listening experience. Once an object-based audio scene has been created, the listener can turn up the volume of certain objects, turn off objects (for example, the commercials), and even choose a different language for dialog or subtitles (Figure 2).

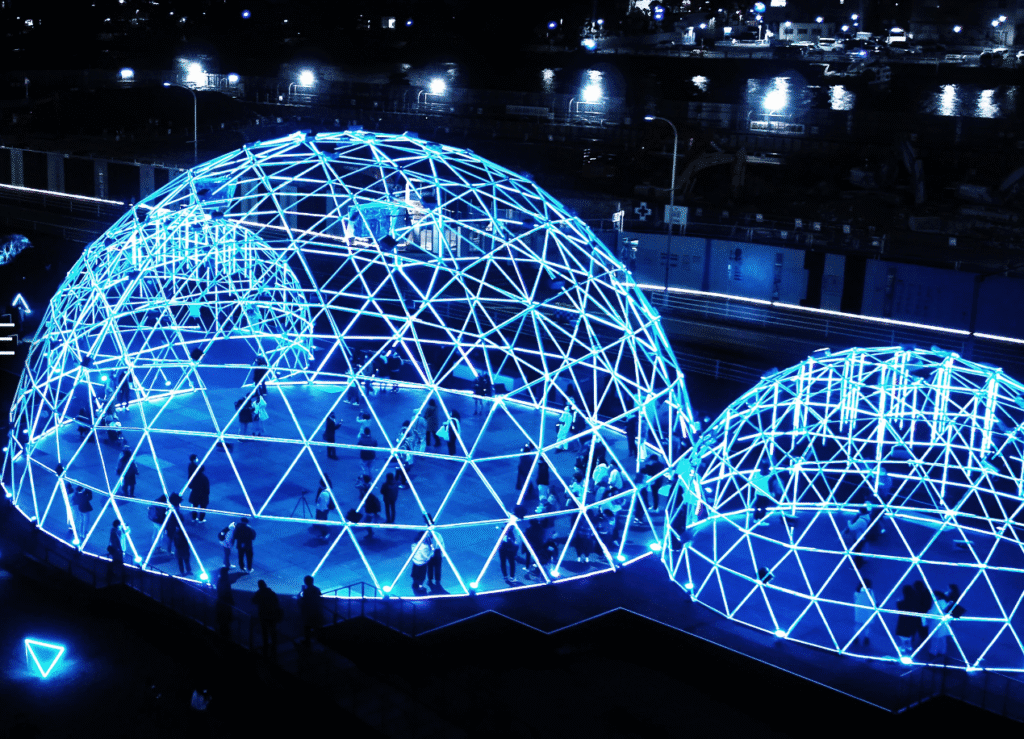
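Listener control of this kind can be modeled as a filtering step applied to the object list before rendering. The sketch below is hypothetical: the `ObjectMeta` fields (`kind`, `gain_db`, `language`) and the preference arguments are illustrative assumptions, not a real OBA metadata schema. It shows the three interactions described above: adjusting an object's level, muting objects, and selecting the dialog language.

```python
from dataclasses import dataclass, replace
from typing import Optional

@dataclass(frozen=True)
class ObjectMeta:
    """Hypothetical per-object metadata as delivered to the playback device."""
    name: str
    kind: str                        # e.g. "dialog", "music", "effects"
    gain_db: float = 0.0
    language: Optional[str] = None   # only meaningful for dialog objects

def apply_listener_prefs(objects, gain_offsets_db=None, muted=(), dialog_language="en"):
    """Return the objects the renderer should mix after the listener's choices.

    gain_offsets_db: {object name: change in dB} chosen by the listener
    muted: names of objects the listener switched off
    dialog_language: keep only dialog objects in this language
    """
    gain_offsets_db = gain_offsets_db or {}
    selected = []
    for obj in objects:
        if obj.name in muted:
            continue  # listener turned this object off
        if obj.kind == "dialog" and obj.language != dialog_language:
            continue  # dialog in a language the listener did not choose
        offset = gain_offsets_db.get(obj.name, 0.0)
        selected.append(replace(obj, gain_db=obj.gain_db + offset))
    return selected
```

For example, with English and French dialog objects, a crowd effect, and music, choosing French, boosting the French dialog by 3 dB, and muting the music leaves only the French dialog (at +3 dB) and the crowd effect for the renderer.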

Scene-Based Audio

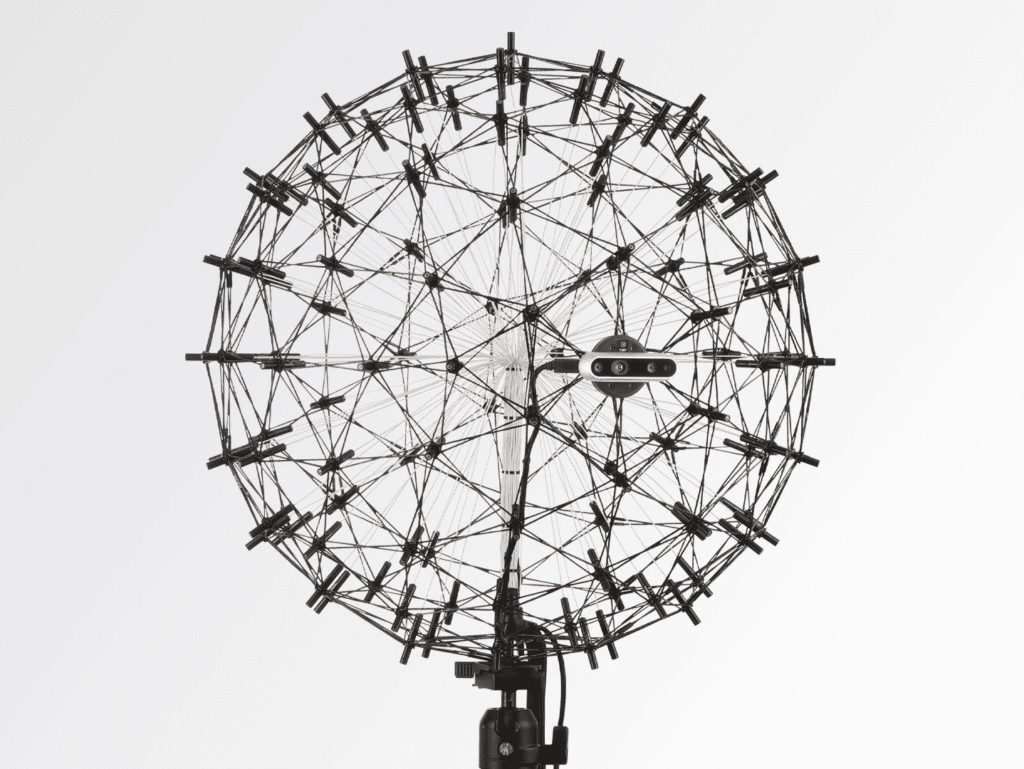

SBA uses higher-order ambisonics (HOA) to represent the whole sound field around the listener in a fully spherical format, so that sounds can be reproduced from specific directions anywhere in 3D space. Capturing HOA requires more, and more complex, microphones: a first-order ambisonics microphone already combines four independent capsules, typically with cardioid polar patterns, and higher orders need more capsules still. SBA is also more complicated than OBA. It can employ vector-based panning, intensity panning, delay panning, the Doppler effect, and similar techniques to place sounds precisely and move them around in 3D space, creating a fully immersive experience.
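Two facts help explain why HOA needs more capture hardware: a full-sphere ambisonic representation of order N has (N+1)² channels, and each channel is a fixed directional weighting of the sound field. The sketch below computes the channel count and encodes a mono sample into first-order ambisonics. It assumes the AmbiX convention (ACN channel order W, Y, Z, X with SN3D normalization); other conventions, such as FuMa, order and scale the channels differently. The function names are hypothetical.

```python
import math

def hoa_channel_count(order):
    """Number of channels in a full-sphere ambisonic signal of the given order."""
    return (order + 1) ** 2

def encode_first_order(sample, azimuth_deg, elevation_deg):
    """Encode a mono sample into first-order ambisonics (AmbiX: ACN order, SN3D).

    Azimuth is measured counter-clockwise from straight ahead (positive = left);
    elevation is measured upward from the horizontal plane.
    Returns the channels in ACN order: (W, Y, Z, X).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                                  # omnidirectional component
    y = sample * math.sin(az) * math.cos(el)    # left/right figure-of-eight
    z = sample * math.sin(el)                   # up/down figure-of-eight
    x = sample * math.cos(az) * math.cos(el)    # front/back figure-of-eight
    return w, y, z, x
```

Orders 1 through 4 give 4, 9, 16, and 25 channels, which is consistent with a first-order microphone getting by with four capsules while higher orders call for larger capsule arrays.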

SBA is well suited to virtual reality (VR) and augmented reality (AR) environments. It supports positional audio that dynamically adjusts the sound field based on the position and orientation of the listener’s head relative to the 3D virtual world. SBA can be delivered over conventional speakers, VR/AR headsets, and other devices.
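One reason SBA suits head-tracked playback is that a whole ambisonic sound field can be rotated with a few multiplications, rather than re-rendering each source. The sketch below handles only yaw (turning the head left or right) for a first-order signal, assuming the same AmbiX channel order (W, Y, Z, X) and a counter-clockwise-positive azimuth. Full 3D head tracking would also apply pitch and roll, with larger rotation matrices at higher orders, and translation of the listener is a harder problem not shown here. The function name is hypothetical.

```python
import math

def rotate_yaw_first_order(w, y, z, x, head_yaw_deg):
    """Counter-rotate a first-order ambisonic frame (ACN order W, Y, Z, X)
    to compensate for the listener turning their head by head_yaw_deg.

    Positive yaw means the head turned to the left (counter-clockwise seen
    from above), so sources must move to the right relative to the head.
    W (omni) and Z (up/down) are unchanged by a rotation about the vertical axis.
    """
    a = math.radians(head_yaw_deg)
    x_r = x * math.cos(a) + y * math.sin(a)
    y_r = y * math.cos(a) - x * math.sin(a)
    return w, y_r, z, x_r
```

A source straight ahead (X = 1, Y = 0) ends up at Y = -1, that is, directly to the listener's right, after the head turns 90 degrees to the left, so the source stays fixed in the virtual world.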

Multi-room Streaming Amplifier with Immersive Audio

The OpenAudio AVR-16200 is based on HOLOWHAS Ultra/Plus/Max and supports both Dolby Atmos and HOLOSOUND immersive audio technologies. Both Atmos and HOLOSOUND support CBA and OBA.